9 more ways to get better results with AI

Advanced tips for collaborating with LLMs.

Readers seemed to find my previous newsletter, “11 ways to get better results with AI”, useful, so here is part 2.

If you haven’t already read the previous newsletter, I’d suggest starting there and then coming back to this one for more in-depth and advanced tips.

Let’s get into it.

12. Get it to break down the problem first

Small tasks such as summarising an email conversation are easy for LLMs to do in a single query, but they can struggle to solve a larger problem in one go.

When “the OG prompt engineer” Sander Schulhoff was on Lenny’s podcast, one of his biggest tips was not to ask the LLM to answer your query straight away, but to have it first break the query down into the sub-problems it needs to solve.

You might ask something like:

I need your help to [do some kind of complex task].

Please can you start by breaking this down into a set of sub-problems and sub-tasks that we need to work through to get to the outcome?

You would then refine the list of sub-tasks and work through them one at a time, giving feedback and iterating along the way.

There’s a secondary benefit to this for tasks you are less familiar with. As Hilary Gridley (who you should follow) writes in “you have to understand the job”:

If you have a good grasp on the underlying process, the degree to which the AI can help you is amazing. If you don’t, you are likely producing a facsimile of actual good work.

Breaking down a problem into discrete parts not only gets you better results for complex tasks, but also helps you work through problems that are outside of your comfort zone.

13. Have it critique itself

Once you’ve gotten an LLM to solve a problem for you, you can ask it to critique itself. Then when it shares its review of its own work, you can get it to implement its own recommendations.

Review and critique what you've written above. Then refine your output based on your recommendations.

This is particularly good for more complex analysis tasks, where you need to be sure that your facts are correct.
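If you work with a model through an API rather than the chat window, the same two-step flow can be scripted. Here is a minimal Python sketch, assuming the common chat format of a list of role/content messages and a hypothetical `ask` function that sends the conversation to whichever model you use and returns its reply. The names `ask`, `answer_with_self_critique` and `fake_model` are illustrative, not part of any real library.

```python
from typing import Callable, Dict, List

# A chat message in the common {"role": ..., "content": ...} shape (an assumption;
# adapt it to whatever client library you actually use).
Message = Dict[str, str]

# The critique prompt from the tip above, sent as a follow-up turn.
CRITIQUE_PROMPT = (
    "Review and critique what you've written above. "
    "Then refine your output based on your recommendations."
)


def answer_with_self_critique(ask: Callable[[List[Message]], str], task: str) -> str:
    """Get a first draft, then ask the model to critique and refine it.

    `ask` is a hypothetical stand-in for your model call: it takes the
    conversation so far and returns the model's reply as a string.
    """
    messages: List[Message] = [{"role": "user", "content": task}]
    draft = ask(messages)

    # Keep the draft in the conversation so the critique has something to review.
    messages.append({"role": "assistant", "content": draft})
    messages.append({"role": "user", "content": CRITIQUE_PROMPT})
    return ask(messages)


# Usage with a fake model that just reports how many messages it was sent.
def fake_model(messages: List[Message]) -> str:
    return f"reply to {len(messages)} messages"


result = answer_with_self_critique(fake_model, "Summarise the main drivers of churn in this data.")
print(result)  # reply to 3 messages
```

The key design choice is keeping the first draft in the conversation history: the critique prompt only works because the model can see what it wrote “above”.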

14. Only use roles for expressive tasks

Another tip from Sander Schulhoff is that role prompting (e.g. “You are an expert in medieval poetry with over 30 years of experience”) doesn’t improve performance on many queries with today’s models.

It works for expressive tasks like writing or summarising, e.g. “Rewrite this as if you are a McKinsey consultant”.

However, giving the LLM a relevant role doesn’t provide any benefit for accuracy-based tasks such as categorising data.

15. The more examples, the better

One of the key points in my previous newsletter was that giving the AI more input leads to better output. One important element of this input is examples.

Just like when you give a task to a human, providing AI with an example of the output you’re looking for can dramatically improve the quality of the results.

And the more examples you can provide, the better the results.

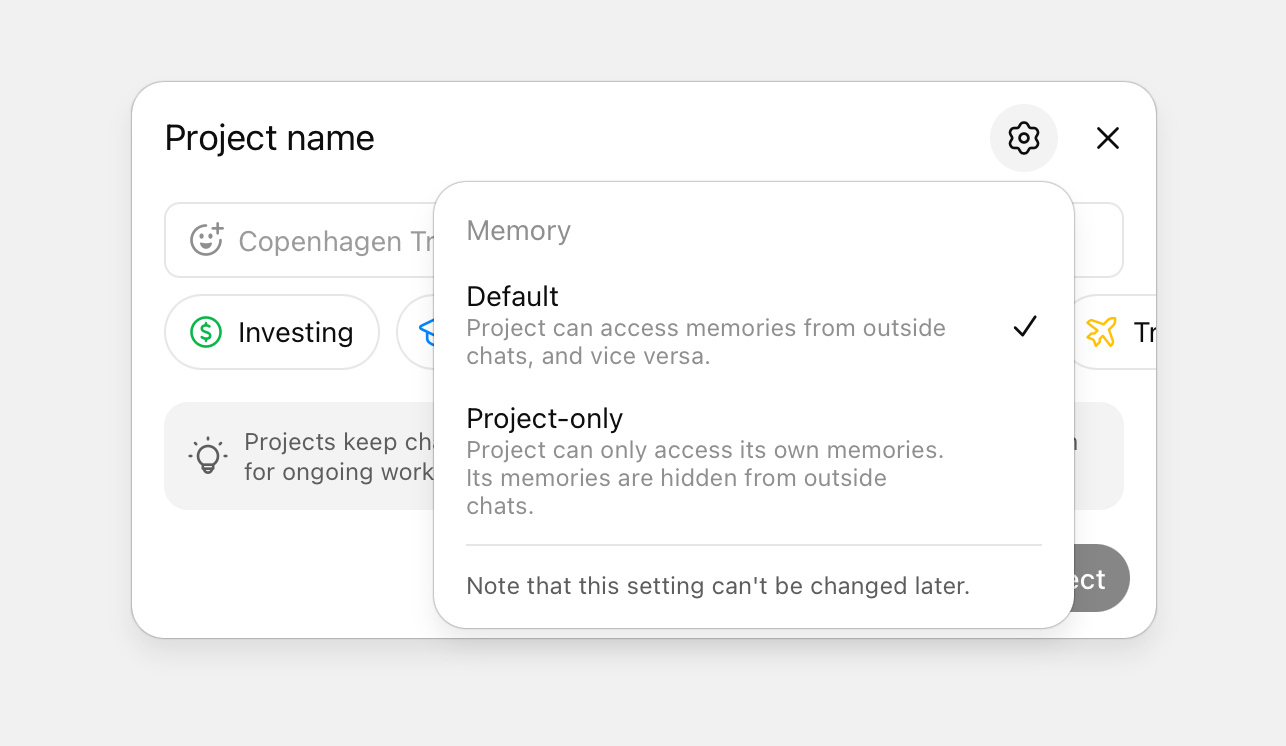
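If you build prompts in a script or keep a reusable template, the idea can be made concrete. Below is a minimal Python sketch that lays out several worked input/output examples before the new input. The function name, the `Input:`/`Output:` layout and the customer-feedback categories are illustrative assumptions, not a required format.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], new_input: str) -> str:
    """Put an instruction, several worked examples and a new input into one prompt."""
    lines = [instruction.strip(), ""]
    for number, (example_input, example_output) in enumerate(examples, start=1):
        lines.append(f"Example {number}")
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")  # blank line between examples
    # Finish with the new case, leaving "Output:" open for the model to complete.
    lines.append("Now do the same for this input.")
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


examples = [
    ("The app crashes when I upload a photo.", "Bug"),
    ("Please add a dark mode.", "Feature request"),
    ("How do I reset my password?", "Support question"),
]

prompt = build_few_shot_prompt(
    "Categorise each piece of customer feedback as Bug, Feature request or Support question.",
    examples,
    "Search results take ages to load.",
)
print(prompt)
```

Adding another example is just one more tuple in the list, so it is easy to keep growing the set of examples as you go.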

16. Isolate your project’s memory

This is a relatively new feature in ChatGPT. You can now isolate a project so that it doesn’t use the memory of your discussions outside of the project, and vice versa.

ChatGPT’s memory feature makes it more useful and personalised, but sometimes you don’t want it drawing on everything it remembers. When a temporary chat isn’t enough, you now have this option too.

17. Prime the LLM before you get it to do a task

Indragie Karunaratne has this great tip in his article I Shipped a macOS App Built Entirely by Claude Code:

There’s a process that I call “priming” the agent, where instead of having the agent jump straight to performing a task, I have it read additional context upfront to increase the chances that it will produce good outputs.

This makes intuitive sense and works for most tasks, just as it would if you took the same approach with a human. For example:

What are some best practices for analysing qualitative UX research data?

Wait for it to respond and then...

Now take a look at these transcripts I've attached and...

I’ve found this quite effective for many types of task, especially more complex ones.

18. Rename your chats

This is a simple one! The more you use ChatGPT (or other LLMs), the messier your chat history gets and the harder it is to find things you’ve worked on previously.

The names that ChatGPT gives your conversations are so short and generic that it can be really hard to tell them apart, especially if you have multiple similar conversations in the same project.

To solve this, rename useful conversations and add emojis to their titles. For example, I try to start all my deep research chats with 🔭 so that I can easily find them later.

19. In ChatGPT, output to a table rather than CSV

When you’re getting ChatGPT to do some data manipulation or analysis, it can help to have it output the results as a table in the chat rather than as a CSV file.

I find that ChatGPT can be very unreliable at exporting CSV files: it doesn’t always follow instructions, and the data can be truncated or contain special characters that you then have to clean up.

However, for smaller tables or outputs, having ChatGPT put the data in a table in the chat is much more reliable, and you can then copy and paste the data with no issues. The only limitation is that tables in the chat can’t be very long.

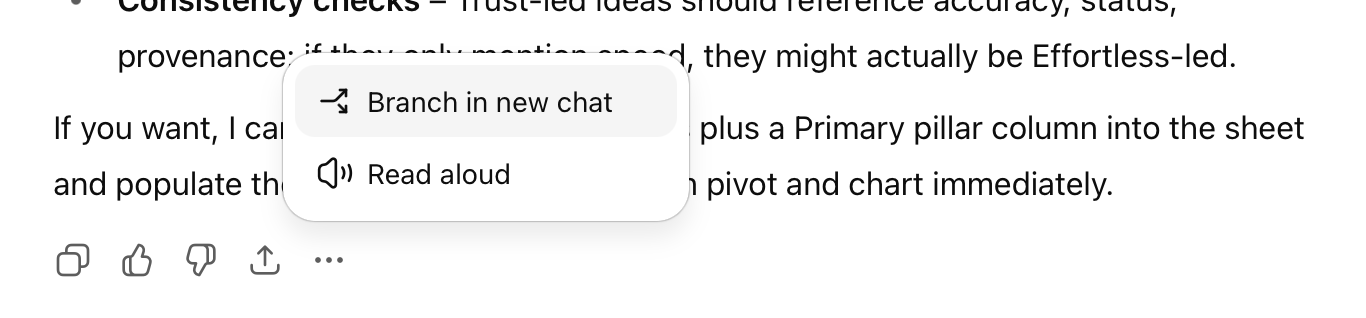

20. Branch your conversation in ChatGPT

This one is a recently added feature. You can now create a new chat from any point in a conversation, so you can take it in more than one direction from that point onwards.

For example, let’s say you’ve prompted it to analyse a spreadsheet of data. There are probably several explorations you want to do on that data, but rather than having to repeat that first step in every conversation, you can do it once and then create a branch for each one.

The reason to do this rather than having one very long conversation is that the longer a chat with an LLM gets, the worse the results get and the more likely you are to see hallucinations. So for any data analysis you’re doing, this feature is going to be very useful.