Why I don’t ask people to think aloud in evaluative research

Briefing people before they start a task leads to unintended consequences.

Asking research participants to think aloud while they complete a task has been a core method in usability testing for decades.

Books like Steve Krug’s Don’t Make Me Think and Jakob Nielsen’s Usability Engineering popularised it for digital product design, and the vast majority of researchers use it in their work.

Yet the way it’s commonly taught and often used isn’t how I would recommend people use it, because it creates a number of undesirable side effects.

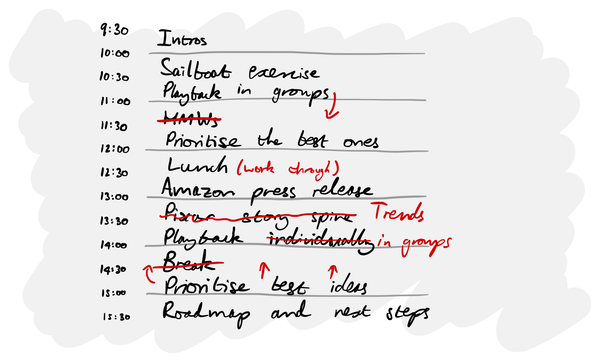

The textbook method

The standard way that think aloud is taught is that before you start your first task, you “ask test participants to use the system while continuously thinking out loud — that is, simply verbalizing their thoughts as they move through the user interface”.

Jakob Nielsen even recommends showing participants a video of what is expected before they start.

This ensures that participants are fully briefed on what to do, but this can have a few unintended consequences...

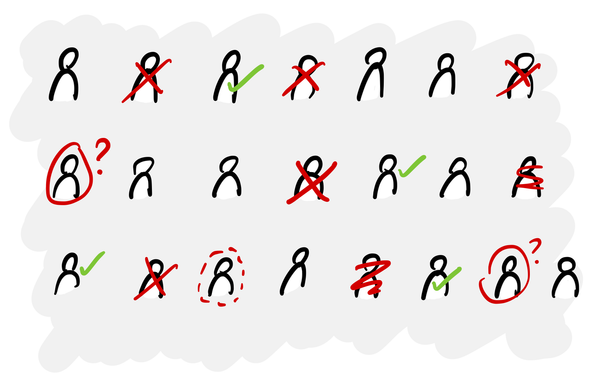

Some people take it too literally

Some participants start exaggerating their behaviour and provide too much detail: “I’m scrolling down the page and oh, there’s a red button. I’m going to click it because it’s red.”

This is not realistic.

Asking a research participant to think aloud can put them in a mindset where they start overanalysing everything they do, which is not how they would behave at home.

It’s like asking someone to go for a run but pay attention to how their feet are striking the ground – forefoot, mid-foot or heel. Because you’re thinking about something that you wouldn’t normally, you’re going to influence and compromise your natural behaviour.

Once someone is in this mindset, trying to self-analyse their every action, it can be really hard to pull them back to a more natural and realistic frame of mind.

This is a much bigger problem with unmoderated research, where you can’t spot and correct this behaviour, and some serial respondents habitually narrate in this exaggerated and unrealistic way.

It’s not natural

We try to make research as realistic as possible because we want to understand how individuals would interact with a product or service in real life.

Yet research isn’t conducted in a very natural setting to begin with: you’re already speaking to a stranger, either on a call or sat next to them.

Asking people to think aloud adds to this. It’s not something that someone would likely ever do at home and they might not have ever done it before.

Instead, only prompt them if they are quiet

I’ve conducted somewhere between 1,000 and 2,000 hours of research and, apart from my very earliest interviews, I’ve not asked people to think aloud before they start a task.

This has worked out fine and, I would argue, has given me better results, for two reasons:

- Because you are sat there/on a call with them, they know that you are interested in what they are doing and most will start talking on their own. Most people do not need to be briefed to alter their behaviour for your benefit – they will do it spontaneously.

- If they are quiet, you can always ask them “what are you thinking?”, “what’s going on here?” or a similar question. It’s much better to have to prompt someone who is quiet than to have someone who is being very literal and overthinking everything in a performative way.

Try it out

Having someone narrate every tiny detail of what they’re doing feels like the right thing to do, and is especially useful now that AI can analyse transcripts for us, but I really don’t think that briefing people in advance to think aloud is the best way to moderate evaluative research.

When you ask someone to adopt an unnatural behaviour from the very start of a task, you risk putting them in a mindset that they would never be in at home.

Instead, let them get on with it. Use your powers of observation to notice what they are doing and how they are reacting. Trust that they will say things when something unusual or interesting happens. If they’re too quiet, there are many ways to nudge them to share more without biasing them.

If you’re not convinced, try it out in your next usability test and let me know how you get on.