Can we still trust quant surveys?

AI is enabling new levels of fraud in online research.

Last year, I was on a panel at the MRS B2B Market Research conference. During the Q&A, an audience member said that on a recent quant survey project, they had to throw away 80% of responses because of fraud.

There’s always been fraud risk in online surveys. People and bots filling them in just for the money is nothing new. But AI has changed the scale of the problem dramatically.

One study found that usable response rates have dropped from 75% to just 10% in recent years. Research from Stanford found that a third of online survey takers admit to using tools like ChatGPT to answer questions. Sean Westwood, a researcher at Dartmouth, built an AI agent to take surveys and found it could evade detection 99.8% of the time. The ‘trick questions’ that researchers have relied on for years no longer work.
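To make that drop concrete, here is a rough back-of-the-envelope sketch in Python. It assumes that the "usable response rate" is the share of completed responses that survive quality checks, and that this rate applies uniformly. `completes_needed` is a hypothetical helper for illustration, not a tool from the studies above.

```python
import math


def completes_needed(target_usable: int, usable_rate: float) -> int:
    """Completed responses to field so that at least `target_usable`
    survive cleaning.

    Assumption (hypothetical): `usable_rate` is the share of completes
    that pass quality checks, applied uniformly to every complete.
    """
    if not 0 < usable_rate <= 1:
        raise ValueError("usable_rate must be in (0, 1]")
    # Round first to strip floating-point noise, then round up to a whole response.
    return math.ceil(round(target_usable / usable_rate, 6))


for rate in (0.75, 0.10):
    print(f"{rate:.0%} usable -> field {completes_needed(400, rate)} completes for 400 usable")
```

Under these assumptions, getting 400 usable responses goes from fielding 534 completes to fielding 4,000, so each usable response needs roughly seven and a half times as much fieldwork.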

Three ways AI enables fraud

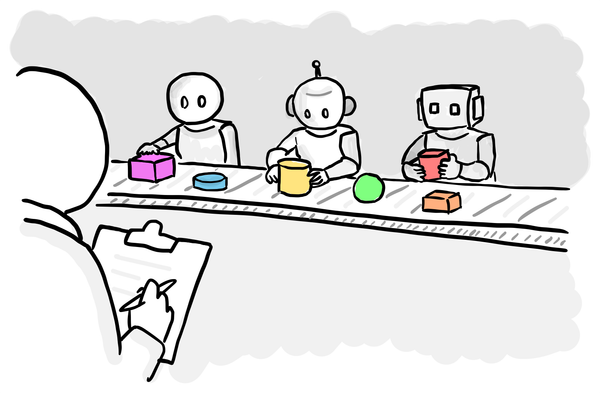

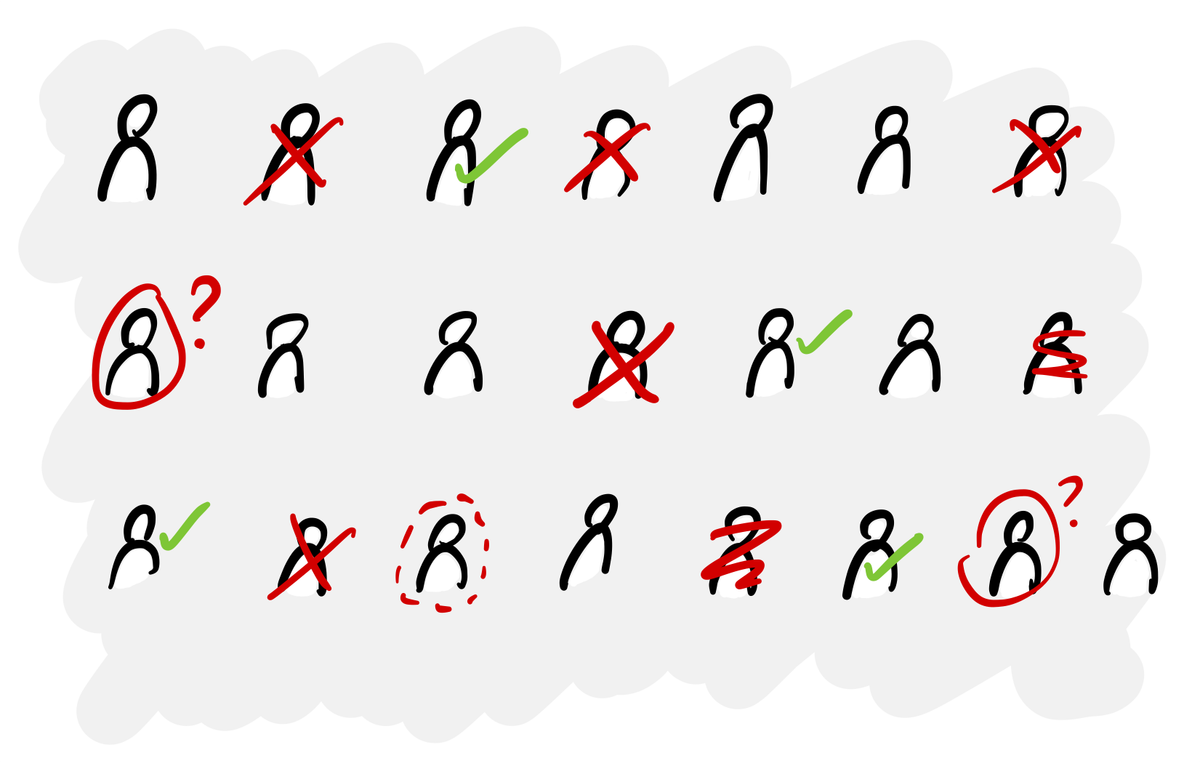

Bots and dishonest participants have always been a problem, but LLMs give bad actors powerful new tools. There are essentially three attack vectors:

- Fully automated bots. These are autonomous agents that complete surveys end-to-end. They can maintain a consistent persona, answer attention checks, generate realistic responses and simulate ‘human’ pacing with deliberate imperfections. Once configured, the reward from a successful completion can far outweigh the cost of running the model.

- Real humans using AI to answer. Here the respondent is real, but the answers aren’t. Sometimes people do it deliberately to complete surveys for the money; other times they just can’t be bothered to fill in another open text field. Some fraud controls may not catch this because the person’s device and identity are genuine, even if their answers aren’t.

- Misrepresentation to pass screeners. People lie about demographics, job roles or product ownership to qualify for higher-paid studies. AI can help them sound convincing and stay consistent across the screener and the main survey.

For all of these, the bigger the reward, the greater the risk. If you’re running niche research with a high incentive, such as $300 to reach CMOs or high-net-worth individuals, this is where you need to be most careful.

What survey companies are doing

Of course, the market research industry recognises that this “could become an existential issue”, so an arms race is underway.

Panel companies and survey platforms are responding with layered defences: identity verification with government IDs or selfie videos, device fingerprinting, behavioural telemetry and so on. Some B2B expert networks even insist on phone calls with potential participants before including them in studies.

All of this increases the cost to recruit participants and run surveys. Now you’re not just paying more for better-quality sample, you’re paying to make sure the people answering are real in the first place.

What researchers can do to reduce fraud risk

Setting up a survey has always involved writing screeners and questions in a way that reduces the chance of people guessing their way through, but now you need to do more to counter threats from AI.

- Interrogate sample quality, not just sample size. Ask providers how respondents’ identities are verified and how they prevent fraud. Think about using your budget for higher-quality sample rather than always going for the biggest possible sample.

- Ask for evidence that respondents meet your criteria. Traditional fieldwork agencies have been doing this for years, but it’s rarer in quant. If someone says they are a director of a company, check Companies House. If they say they drive a Jaguar, have them share their registration document. AI can actually help companies do these checks at scale.

- Design studies to be lower effort. In the past, if someone started to tire of your overly long survey with too many open-ended questions, they would just give up. Now they can use LLMs to cheat their way to the end. Shorter surveys with clearer questions reduce the risk that real people will reach for ChatGPT to get through your badly designed questionnaire.

- Use mixed methods. I know this isn’t anything new, but the greater risk of fraud in surveys means it makes even more sense to use more than one method to answer any question. Triangulate what you’re seeing with other data, including qual research.

- Source from your own customers where possible. A survey on your website or in your app gives you much more confidence than a general-population panel. At least you know people had to be using your product in the first place.

- Consider having no incentive. It’s the money that attracts the fraud, so try removing it. This pairs well with the previous point: if you have something straightforward to ask, try popping it up on your site before committing to a full paid study.

How to make decisions about when to use online surveys

If quant carries greater risks than before, then you need to be thoughtful about when and how to use it, depending on what you’re using it for:

- For exploratory work like early concept testing or rough prioritisation, online panels with strong QA are still defensible. But treat numbers as directional rather than the perfect truth.

- For operational decisions like roadmap prioritisation or tracking satisfaction over time, you need better sample and consistency checks across waves to keep it valid.

- For high-stakes work like market sizing or major business decisions, you need verification-heavy surveys backed up by mixed methods to reduce the risk.

If you can’t afford the quality needed for the bigger decisions, you may be better off with less quant and more qual and behavioural evidence than with cheap quant that produces questionable results.

Think: if 10% of responses were compromised, would it change the outcome of your research? If yes, you need either higher-quality sample, mixed methods, or both.
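One way to run that check is a worst-case sensitivity test: assume every compromised response went to whichever option came out on top, remove them, and see whether the ranking still holds. The Python sketch below does this for a single-choice question. `survives_contamination` is a hypothetical helper, and the assumption that all bad responses favoured the leader is deliberately pessimistic, not a model of how fraud actually behaves.

```python
import math


def survives_contamination(counts: dict[str, int], contaminated_share: float) -> bool:
    """Return True if the top option still leads after a worst-case purge.

    Worst case (an assumption): every compromised response was a vote for
    the current leader, so they are all subtracted from the leader's count.
    """
    if len(counts) < 2:
        raise ValueError("need at least two options to compare")
    total = sum(counts.values())
    # Number of responses treated as compromised, rounded up to be conservative.
    bad = math.ceil(round(total * contaminated_share, 6))
    ranked = sorted(counts.values(), reverse=True)
    leader, runner_up = ranked[0], ranked[1]
    # The leader's margin must be strictly larger than the number of bad responses.
    return leader - bad > runner_up


# Hypothetical concept test results, 400 responses each.
close_call = {"Concept A": 220, "Concept B": 180}
clear_win = {"Concept A": 250, "Concept B": 150}
print(survives_contamination(close_call, 0.10))  # False: a 40-vote lead is wiped out
print(survives_contamination(clear_win, 0.10))   # True
```

A less pessimistic version could spread the bad responses across options in proportion to their shares, but when you cannot tell which responses are fake, the worst case gives a safe lower bound.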

Another tool in the toolbox, not the perfect truth machine

Online surveys aren’t ‘dead’ or useless, but the days of assuming that quant’s bigger numbers will always give you greater certainty are over.

You asked lots of people a set of questions... but are they real people? Did they really answer themselves or did they get a little help from ChatGPT? Are they really the type of people you thought they were? There has never been so much uncertainty about who (or what) is on the other side of your survey.

Even so, we’re not going to go back to in-person qual to answer every question. It just doesn’t scale. Imperfect as they are, online surveys are here to stay. To get value from them, we just have to be more thoughtful about how we use them and more conscious of how they can be compromised.