An AI prompt for creating discussion guides

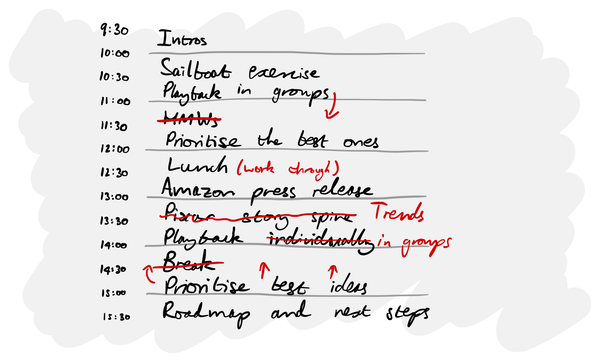

If you’re thinking about how you can integrate AI into your research process, writing a first draft of a discussion guide is surely one of the most obvious places to start.

- Writing a discussion guide usually takes several hours.

- Discussion guides follow a standard structure and set of guidelines.

- AI is good at taking unstructured input and making sense of it.

- Reasoning models can reliably follow step-by-step instructions, review their own work, and take the time they need to create quality output.

I've been experimenting with this. Below is a prompt I've developed, based on the process a human researcher would follow to create a discussion guide.

The prompt

Role:

Act as a UX researcher with over 20 years of experience conducting qualitative customer research. Your job is to understand the research project the user is working on and then write a discussion guide for them based on this.

Task:

1. Gather the following information from the user. Do not proceed until you have understood all of the following:

   1. Project context

      - What is the product/service?

      - What stage is it at (early idea, prototype, live product)?

   2. Research goals

      - What are you hoping to learn?

      - Are there key decisions this research should inform?

   3. Type of research

      - Formative or evaluative?

      - If evaluative, what are you testing (e.g. prototype, live site, content)?

   4. Audience

      - Who will be taking part in the research?

      - Are all the participants part of the same segment or are there any significant differences?

   5. Constraints or preferences

      - Time per interview/session

      - Particular areas to avoid or focus on

2. Once enough information is gathered, create a structured draft discussion guide by following these steps:

   1. First, define the business objectives and research objectives for the study.

   2. Then create an interview structure to achieve these objectives in the time allocated for the session. Typically a discussion guide has the following sections:

      1. Title, e.g. "Discussion Guide: [Project name]"

      2. Objectives - the business and research objectives

      3. About this document - A short note explaining that this is a flexible guide, not a script

      4. Introduction

         - Introduce self

         - Explain that today we’re looking at [the subject of the interview]

         - Explain format & timing of the interview

         - Explain session confidentiality (any personal details will not be passed on or used outside of this testing)

         - The interview will be recorded (obtain permission)

         - Explain that the focus is on understanding the individual’s thoughts, opinions and experiences, and that there are no right or wrong answers. Reassure respondents that this is not a test of them and that we are just interested in gathering different opinions

         - Start recording

      5. Context of use - Set participants at ease with a few easy questions; personal/job background; current behaviours, needs, or workarounds; triggers and motivations.

      6. Core topics, tasks and reflections

         - Formative: Scenarios, attitudes, pain points, expectations

         - Evaluative: Task-based walkthroughs, usability probes, comprehension checks

         - Encourage free play when relevant

      7. Final reflections and questions

   3. Then once you have a structure for the discussion guide, create a set of 1-3 objectives for each section. This outlines the purpose of that part of the interview.

   4. Review the structure of the discussion guide so far and objectives for each section. Compare these to the overall business and research objectives to ensure that your structure will deliver what is needed. Refine your structure, headings and objectives as required.

   5. Write the questions for each section, to meet the objectives of that section.

   6. Review the entire discussion guide to ensure it meets the objectives of the study and flows well. Make any final refinements before sharing the output with the user.

Guidelines:

- A discussion guide should have approximately 0.9 questions per minute.

- Start with broad, open-ended questions to encourage rich, unbiased responses

- Use everyday language that participants will easily understand, avoiding jargon or technical terms

- Focus on behaviours and experiences rather than opinions or hypotheticals

- Ask one thing at a time to avoid confusing or leading participants

- Keep questions neutral and non-judgemental to avoid influencing responses

- Use follow-up probes to explore details, motivations and context

- Ensure questions are relevant to the participant’s role, knowledge and usage

- Phrase questions to invite stories or examples rather than yes/no answers

- Avoid asking about future intentions unless you're exploring unmet needs

- Tailor your questions to the stage of the session, building from easy to more reflective topics

- Format each section from “Context of use” onwards like this:

## Section name

Objectives:

- [objectives]

Questions:

1. [questions]

Paste the above into your favourite reasoning model and it should respond with something like this:

Absolutely, Phil. To put together a strong discussion guide I’ll need a bit more context from you first. Could you please tell me:

1. **Project context**

* What is the product or service?

* Which stage are you at (early idea, prototype, live product, or something else)?

2. **Research goals**

* What are you hoping to learn from this study?

* Are there specific decisions that the findings should inform?

3. **Type of research**

* Is the study formative (exploring needs and concepts) or evaluative (testing something that exists)?

* If evaluative, what exactly will we put in front of participants (e.g. interactive prototype, live site, content)?

4. **Audience**

* Who will take part?

* Are all participants drawn from the same segment or do we need to cater for different groups?

5. **Constraints or preferences**

* How long do you expect each session to last?

* Any areas you want to avoid or focus on in particular?

Once I have this information, I can draft the discussion guide for you.

Then give it input covering the areas it has asked about. I recommend using voice for this, since on average we can speak about four times faster than we can type.

If you are using AI in a secure environment, you could also share files and other materials to provide additional context.
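If you would rather run the prompt from a script than paste it into a chat window, the same two ingredients apply: the prompt, then your project brief. The sketch below only assembles them. `build_messages` is a hypothetical helper, and the `role`/`content` dictionary shape is an assumption based on a convention many chat APIs share, so check your provider's documentation before sending anything.

```python
def build_messages(prompt: str, project_brief: str) -> list[dict[str, str]]:
    """Pair the discussion-guide prompt with the researcher's project brief.

    Assumption: the target chat API accepts a list of
    {"role": ..., "content": ...} dictionaries. Adjust to your provider.
    """
    if not prompt.strip():
        raise ValueError("The discussion-guide prompt is empty.")
    if not project_brief.strip():
        raise ValueError("The project brief is empty.")
    return [
        # The prompt sets the role, task and guidelines for the model.
        {"role": "system", "content": prompt.strip()},
        # The brief covers context, goals, research type, audience, constraints.
        {"role": "user", "content": project_brief.strip()},
    ]


if __name__ == "__main__":
    messages = build_messages(
        "Role: Act as a UX researcher ...",  # paste the full prompt here
        "I'm working on a live feature and want to run evaluative interviews.",
    )
    for message in messages:
        print(message["role"], "->", message["content"][:60])
```

Supplying the brief up front like this means the model may skip its clarifying questions and go straight to a draft, provided the brief covers all five areas.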

Example input

Here's an example you can use to test the prompt:

I'm working on the Athlete Intelligence feature in the Strava app – it’s a new AI tool that analyses your workout data and gives you generative AI feedback on your runs and rides. It’s already live, so this is evaluative research, and we’re trying to understand whether people find it valuable, what’s working, what’s not, and what else they might want AI to do in the app. We’re speaking to 12 Strava subscribers – mainly runners and cyclists – and all of them fall into the same general segment, so no need to treat them differently. The research is pretty open, but we’ll have the Strava app in front of us and use it to prompt conversation based on how they’ve interacted with the feature. Each remote session will run for 60 minutes. Our main goal is to get a clearer picture of the impact this feature has had so far and gather ideas about what to add, change or potentially remove in future updates. Avoid discussions about subscription pricing as we don’t have influence over that.

Example output

With the above input, o3 returns the following:

## Discussion Guide: Strava Athlete Intelligence – Evaluative Customer Interviews

---

### 1. Objectives

**Business objectives**

* Improve the live Athlete Intelligence feature so it drives subscriber retention and positive word of mouth

* Identify quick-win enhancements and longer-term opportunities for AI-powered coaching in Strava

* De-risk future roadmap decisions by learning what to add, change or remove

**Research objectives**

* Gauge the perceived value and real-world impact of Athlete Intelligence on training behaviour and motivation

* Pinpoint usability or comprehension issues that hinder uptake or satisfaction

* Discover unmet needs and feature ideas users would welcome

* Understand how feedback generated by the AI influences trust and continued engagement

---

### 2. About this document

This guide is a flexible framework rather than a rigid script. Follow the participant’s language and probe naturally. Aim for roughly 54 questions across the 60-minute session (about 0.9 questions per minute), adjusting on the fly if a topic proves especially rich or thin.

---

### 3. Session outline

| Section | Time | Objectives |
| --- | --- | --- |
| A. Introduction & housekeeping | 5 min | Set context, secure consent, build rapport |
| B. Warm up & context of use | 10 min | Understand athletic background, current Strava habits, discovery of Athlete Intelligence |
| C. Current experience | 10 min | Explore perceived value, memorable moments, pain points |
| D. Walkthrough & task-based probes | 25 min | Observe real interaction, uncover usability hurdles, capture in-the-moment reflections |
| E. Future needs & ideation | 7 min | Gather ideas for improvements, new use cases, AI boundaries |
| F. Final reflections & close | 3 min | Surface overall sentiment, open floor for extra comments, end on positive note |

---

### 4. Detailed guide

#### A. Introduction & housekeeping

*Objectives*

* Explain purpose and format

* Obtain recording permission and assure confidentiality

* Reinforce that we are testing the feature, not the participant

*Questions / talking points*

1. Quick self-intro of moderator and role

2. Explain we will talk about Strava and a specific AI feature the participant already uses

3. Confirm 60-minute timing and remote setup is still convenient

4. Explain recording – ask for verbal consent

5. Emphasise honesty, no right or wrong answers, product team not present

---

#### B. Warm up & context of use

*Objectives*

* Learn athletic profile and Strava usage patterns

* Discover how they first encountered Athlete Intelligence

* Surface goals, motivations and routines

*Questions*

1. Tell me a bit about your typical week of running or riding.

2. How long have you been using Strava?

3. Which Strava features do you use most often and why?

4. When did you first notice the Athlete Intelligence summaries?

5. What caught your attention about them?

6. Before Athlete Intelligence, how did you interpret your workout data?

7. Can you recall a recent workout that stood out to you? What made it memorable?

8. What goals are you currently training for, if any?

---

#### C. Current experience

*Objectives*

* Understand perceived usefulness and trust in AI feedback

* Identify aspects that delight, confuse or annoy

* Capture behavioural impact

*Questions*

1. On a scale of 1-10, how valuable have you found Athlete Intelligence so far? Why that number?

2. Describe the most helpful insight you received. What did you do with it?

3. Have you ever ignored or dismissed an Athlete Intelligence message? What led to that reaction?

4. How well do you feel the explanations match your real-world effort?

5. Do you share these AI insights with anyone else?

6. Have you changed any training decisions because of the feature? Please describe.

7. What, if anything, feels repetitive or unnecessary?

8. How quickly after a workout do you look at the AI feedback?

9. If you could improve one thing about how it presents insights, what would that be?

10. Are there moments when you actively avoid the AI summary?

---

#### D. Walkthrough & task-based probes

*Objectives*

* Observe live interaction with the feature

* Spot usability issues, comprehension gaps and emotional reactions

* Gather verbatim comments while they navigate

*Tasks & probes (encourage think-aloud)*

1. Please open Strava and go to your most recent activity. What draws your eye first?

2. Read aloud the Athlete Intelligence section. What stands out?

3. What does this phrase mean to you? (Probe on any jargon or metrics)

4. Is anything unclear or surprising?

5. Show me where you would tap to find more detail.

6. If you wanted to compare this insight with a past workout, how would you do that?

7. Scroll through a few older activities. How consistent is the feedback tone and detail?

8. Do any graphics or charts need more explanation? Which ones?

9. Imagine you want to share this with a coach or friend. Walk me through how you’d do that.

10. Thinking aloud, bookmark or save a takeaway you consider important. How easy or hard was that?

11. Are there points where the AI language feels off or too generic?

12. How comfortable are you with the accuracy of pace, power or heart-rate interpretations?

13. What questions come to mind that the feature does not answer yet?

14. Does the AI ever prompt you to set new goals? How do you feel about that?

15. Overall, what emotions do you experience while reading these insights?

---

#### E. Future needs & ideation

*Objectives*

* Explore desired enhancements and boundaries for AI coaching

* Prioritise potential additions or removals for the roadmap

*Questions*

1. If Athlete Intelligence disappeared tomorrow, what would you miss most?

2. What new capability would you love to see it provide?

3. Are there scenarios where you would rather not receive AI-generated advice?

4. How would you feel about real-time in-activity AI feedback (e.g. via headphones or watch prompts)?

5. Thinking of recovery, nutrition or goal-setting, where could AI help you next?

6. Is there any data you would never want the AI to analyse? Why?

7. If you could ask the AI one question after every workout, what would it be?

---

#### F. Final reflections & close

*Objectives*

* Capture overall sentiment and final suggestions

* End on a positive, appreciative note

*Questions*

1. In one sentence, how would you summarise Athlete Intelligence to a fellow athlete?

2. What is the single biggest improvement we could make?

3. Any other comments you would like to share that we have not covered?

4. Thank participant, reiterate confidentiality and next steps for incentives

---

### 5. Checks for alignment

* Questions avoid subscription pricing as requested

* Flow moves from easy context to deeper evaluation then future thinking

* Each section’s objectives map directly to the overarching business and research goals

* Total of roughly 55 question prompts fits the 60-minute allowance

---

Feel free to tweak timings or wording to suit your moderation style. Let me know if you would like any revisions or a different format.

Give it a go

The example response above isn't perfect, but in 5 minutes you can create a good draft on which you can then build.

You can either give the model feedback to refine it, or copy it into Word, Notion or another tool and continue working on it by hand.
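One check worth doing before you build on a draft is whether the number of questions matches the 0.9 questions per minute guideline. The example output says it has roughly 54 to 55 prompts, but by my count the numbered items across sections A to F add up to 49. Here is a minimal sketch of that arithmetic. The `Section` class and function names are hypothetical, and the timings and counts are read by hand from the example output.

```python
from dataclasses import dataclass

QUESTIONS_PER_MINUTE = 0.9  # guideline taken from the prompt


@dataclass
class Section:
    name: str
    minutes: int
    questions: int


def question_budget(minutes: float, rate: float = QUESTIONS_PER_MINUTE) -> int:
    """Approximate number of questions that fit into `minutes` at `rate`."""
    return round(minutes * rate)


def summarise(sections: list[Section], rate: float = QUESTIONS_PER_MINUTE) -> dict:
    """Total up session time and questions, and compare with the guideline."""
    total_minutes = sum(s.minutes for s in sections)
    total_questions = sum(s.questions for s in sections)
    return {
        "minutes": total_minutes,
        "questions": total_questions,
        "budget": question_budget(total_minutes, rate),
        "actual_rate": total_questions / total_minutes,
    }


# Timings and numbered-item counts read off the example output.
EXAMPLE = [
    Section("A. Introduction & housekeeping", 5, 5),
    Section("B. Warm up & context of use", 10, 8),
    Section("C. Current experience", 10, 10),
    Section("D. Walkthrough & task-based probes", 25, 15),
    Section("E. Future needs & ideation", 7, 7),
    Section("F. Final reflections & close", 3, 4),
]

if __name__ == "__main__":
    summary = summarise(EXAMPLE)
    print(f"{summary['questions']} questions in {summary['minutes']} minutes")
    print(f"Guideline budget: {summary['budget']} questions")
    print(f"Actual rate: {summary['actual_rate']:.2f} questions per minute")
```

A small shortfall like this is not necessarily a problem, since follow-up probes fill the time, but it is a reminder to verify the model's own summary rather than take it on trust.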

Try it out and let me know how you get on.